What is Alert Fatigue?

Alert fatigue occurs when engineers, especially DevOps engineers, SREs, and on-call teams, become numb to the constant stream of notifications from their monitoring tools. Instead of responding quickly to important issues, they start ignoring or missing crucial alerts because of the sheer volume they receive. This is no small problem: it leads to slower incident response, potential service outages, and reduced reliability.

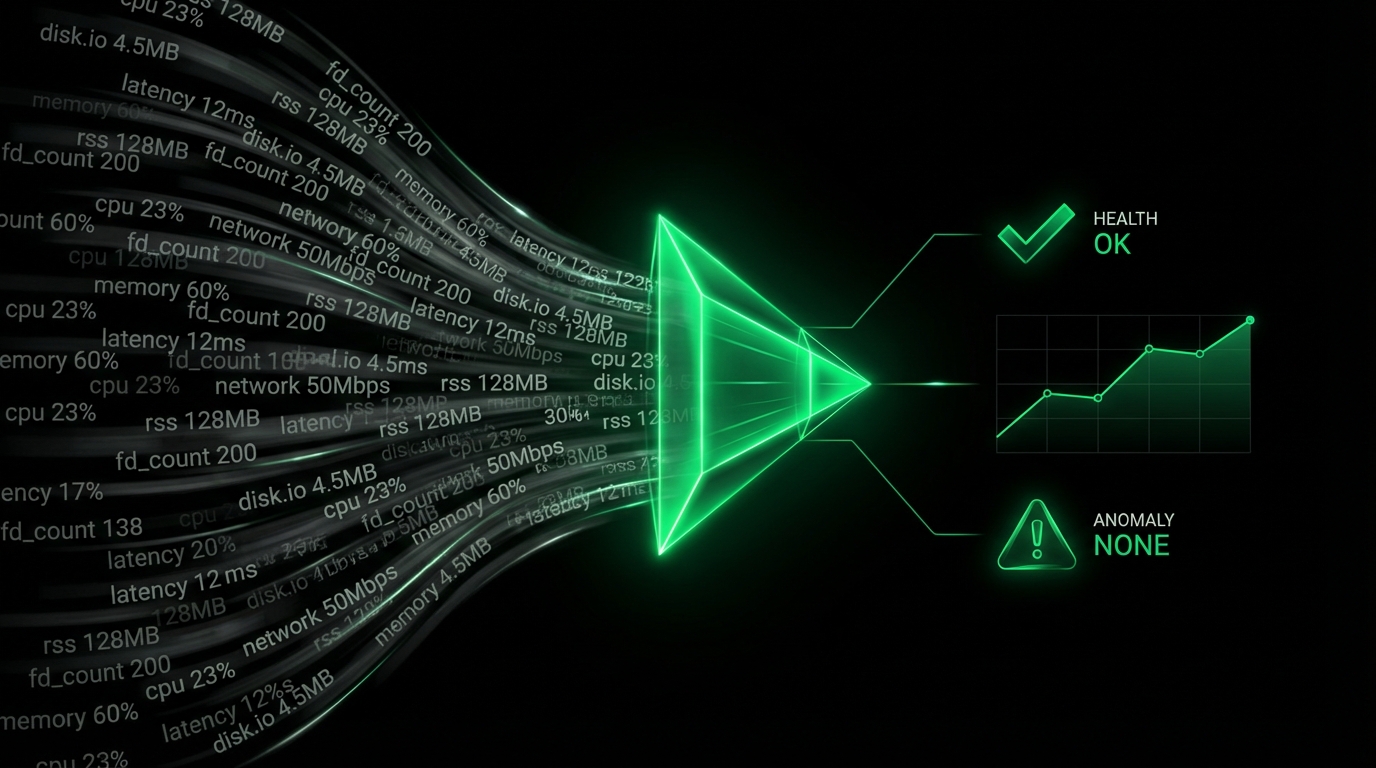

Robust monitoring is a must-have in the fast-moving world of cloud infrastructure and microservices. However, when every little spike sets off an alarm, it becomes hard to separate the wheat from the chaff. Eventually alerts lose their meaning, and teams develop a culture of ignoring alarms, even the ones that shouldn’t be ignored.

Why is Alert Fatigue a Problem?

Alert fatigue has several consequences that impact both individuals and organizations:

- Slower Response: With too many alerts, engineers often delay their response, assuming each one is just another non-critical issue.

- Desensitization: When engineers are exposed to constant noise, they may ignore even valid alerts, leading to critical incidents being missed.

- Burnout: Being on-call already comes with stress, but alert fatigue can significantly contribute to mental exhaustion and burnout.

- Increased Downtime: If critical issues are not attended to promptly, downtime can increase, leading to revenue loss, damaged reputation, and frustrated users.

Common Causes of Alert Fatigue

- Too Many Alerts: When monitoring systems are not fine-tuned, even small or routine issues generate alerts, overwhelming teams.

- Lack of Prioritization: When alerts are not categorized by severity, it’s hard to distinguish between minor warnings and major incidents.

- Irrelevant Alerts: Alerts for non-critical systems or issues unrelated to the on-call engineer’s responsibilities contribute to noise.

- Unclear or Duplicate Alerts: Multiple systems sending alerts for the same issue can create confusion and redundancy.

- Misconfigured Thresholds: Thresholds that are too sensitive or improperly set can cause systems to trigger alerts unnecessarily.

How to Avoid Alert Fatigue

Avoiding alert fatigue requires a combination of thoughtful configuration, automation, and human-centric strategies. Here are some key techniques to minimize the issue:

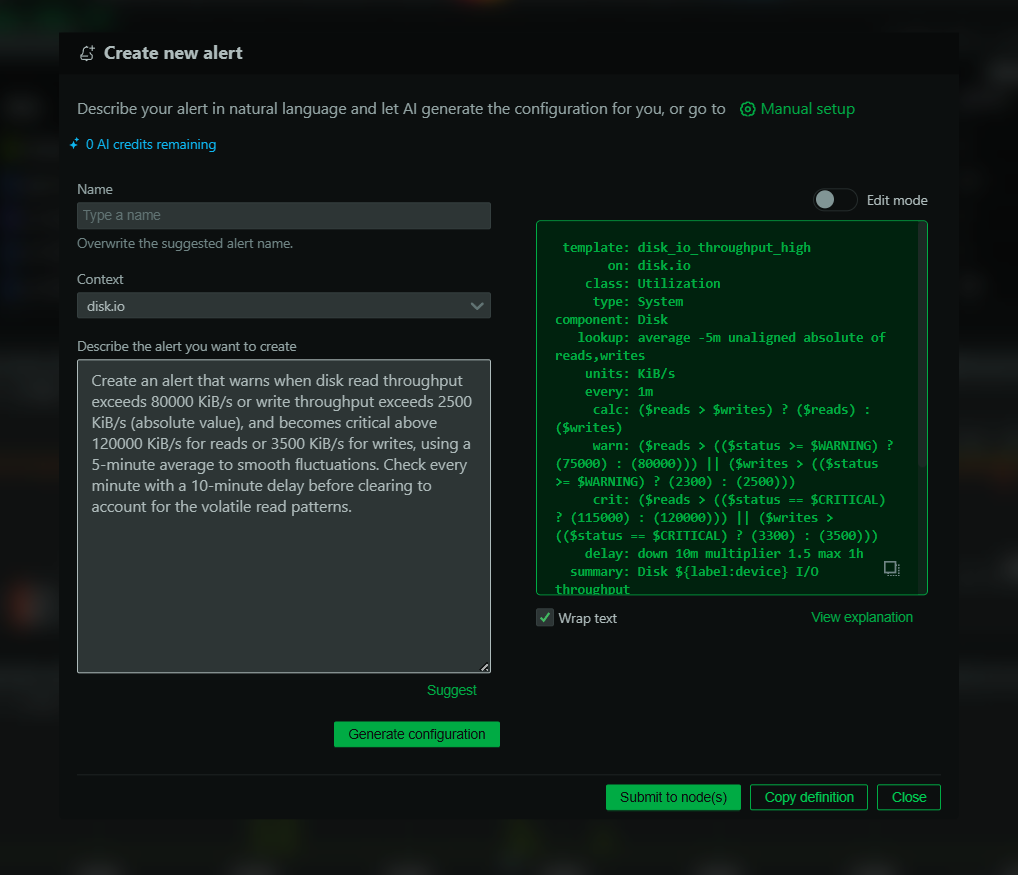

1. Optimize Alerting Rules

The most effective way to combat alert fatigue is to tune your alert rules. Regularly review and adjust thresholds so that only serious problems, the ones that need immediate attention, trigger an alert.

- Use Dynamic Thresholds: Instead of static limits, implement dynamic thresholds that adapt based on historical data. For instance, if CPU usage spikes at certain times of the day, configure alerts to trigger only when usage is significantly higher than the usual pattern.

- Adjust Sensitivity: For non-critical systems or services, consider lowering the alert sensitivity (that is, raising the threshold), so the system doesn’t trigger alerts for minor, expected deviations.
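One simple way to approximate a dynamic threshold is to compare the current value against the mean and standard deviation of past readings for the same time slot. The sketch below is only an illustration under that assumption: the function names, the multiplier `k`, and the sample CPU figures are all hypothetical, and real monitoring tools typically use more sophisticated baselines.

```python
from statistics import mean, stdev


def dynamic_threshold(history, k=3.0):
    """Return mean + k standard deviations of past samples for one time slot."""
    if len(history) < 2:
        raise ValueError("need at least two historical samples")
    return mean(history) + k * stdev(history)


def should_alert(current, history, k=3.0):
    """Alert only when the current value is well above the usual pattern."""
    return current > dynamic_threshold(history, k)


# Hypothetical CPU usage (%) seen at 09:00 on previous weekdays.
nine_am_history = [70.0, 72.0, 68.0, 71.0, 69.0]

print(should_alert(73.0, nine_am_history))  # within the usual morning pattern
print(should_alert(95.0, nine_am_history))  # far above the usual pattern
```

A larger `k` makes the rule less sensitive, which may suit non-critical services; a smaller `k` makes it more sensitive.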

2. Categorize Alerts by Priority

Not all alerts are created equal. By categorizing alerts into different priority levels, teams can focus on the most pressing issues first.

- Critical Alerts: Issues that require immediate human intervention (e.g., service outages, major performance degradation).

- Warning Alerts: Non-urgent issues that could escalate if left unattended (e.g., disk space usage nearing capacity).

- Informational Alerts: Data points that are useful to monitor but don’t require immediate action (e.g., periodic system health checks).

Action Step: Apply different notification methods for different categories. For example, critical alerts could trigger SMS and phone calls, while warnings only send emails or messages in chat systems like Slack.
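As a rough sketch of the action step above, the mapping from severity to notification channels can be kept in one small table. The channel names here are placeholders, not the identifiers of any particular paging or chat product, and the `dashboard` channel for informational alerts is an assumption.

```python
from enum import Enum


class Severity(Enum):
    CRITICAL = "critical"
    WARNING = "warning"
    INFO = "info"


# Hypothetical channel names; substitute whatever your tooling provides.
ROUTES = {
    Severity.CRITICAL: ["sms", "phone_call"],
    Severity.WARNING: ["email", "chat"],
    Severity.INFO: ["dashboard"],  # assumed: informational alerts never page anyone
}


def channels_for(severity):
    """Return the notification channels to use for an alert of this severity."""
    return list(ROUTES[severity])


print(channels_for(Severity.CRITICAL))
print(channels_for(Severity.WARNING))
```

Keeping the routing in a single table makes it easy to review who gets interrupted by what.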

3. Consolidate Duplicate Alerts

Many teams use a wide range of monitoring tools, which often results in duplicate alerts for the same issue. Consolidating these duplicates can drastically reduce noise.

- Alert Aggregation: Use alert aggregation tools that consolidate notifications from different sources into a single, unified alert. This way, teams only deal with one incident alert instead of multiple ones from different systems.

- Alert Correlation: Implement correlation logic to group related alerts together. For example, if a network issue takes multiple services down, group those alerts under a single incident.
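The grouping idea can be sketched in a few lines, assuming each alert already carries a correlation key (for example, the affected network segment) and a timestamp in seconds. The field names `correlation_key`, `ts`, and `source`, along with the five-minute window, are illustrative assumptions rather than any tool's actual schema.

```python
def group_alerts(alerts, window_seconds=300):
    """Group alerts that share a correlation key and arrive within
    window_seconds of the first alert in the incident."""
    incidents = []
    open_by_key = {}
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        key = alert["correlation_key"]
        incident = open_by_key.get(key)
        # Start a new incident if none is open for this key or the window has passed.
        if incident is None or alert["ts"] - incident["started"] > window_seconds:
            incident = {"key": key, "started": alert["ts"], "alerts": []}
            incidents.append(incident)
            open_by_key[key] = incident
        incident["alerts"].append(alert)
    return incidents


# Hypothetical alerts from three tools, all caused by one network problem.
alerts = [
    {"source": "tool-a", "correlation_key": "network:rack-7", "ts": 0},
    {"source": "tool-b", "correlation_key": "network:rack-7", "ts": 30},
    {"source": "tool-c", "correlation_key": "db:disk", "ts": 40},
    {"source": "tool-c", "correlation_key": "network:rack-7", "ts": 60},
    {"source": "tool-a", "correlation_key": "network:rack-7", "ts": 1000},
]

for incident in group_alerts(alerts):
    print(incident["key"], len(incident["alerts"]))
```

In this sketch the on-call engineer would see three incidents instead of five separate notifications. Choosing a good correlation key is the hard part in practice and depends on your topology.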

4. Implement Escalation Policies

Not all alerts need to go to the same team, or even the same person. Implementing escalation policies can ensure that alerts only reach the right people at the right time.

- Assign Alerts to the Right Teams: Ensure that each alert is routed to the team responsible for that specific service or system. Irrelevant alerts are a major contributor to alert fatigue.

- Escalation Tiers: Create escalation policies where alerts are first routed to a primary responder. If the issue is not resolved within a specific time window, it escalates to a senior engineer or manager.
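An escalation policy of this kind can be modeled as a list of time delays and responders. The following is a minimal sketch: the tier names and the 15- and 30-minute delays are made-up values, and real incident management tools offer richer policies (rotations, repeat notifications, and so on).

```python
# Hypothetical policy: (minutes without resolution, who gets notified).
POLICY = [
    (0, "primary on-call"),
    (15, "senior engineer"),
    (30, "engineering manager"),
]


def current_responder(minutes_unresolved, policy):
    """Return the highest tier whose delay has elapsed for this alert."""
    responder = None
    for delay, who in sorted(policy):
        if minutes_unresolved >= delay:
            responder = who
    return responder


print(current_responder(5, POLICY))
print(current_responder(20, POLICY))
print(current_responder(45, POLICY))
```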

5. Leverage Automation and Self-Healing Systems

Automating responses to common alerts can dramatically reduce the volume of notifications requiring human intervention.

- Automated Remediation: Implement scripts or tools that automatically handle predictable, low-level issues. For example, if a service goes down, an automated script could restart it without human involvement.

- Self-Healing Infrastructure: Modern infrastructure (e.g., Kubernetes) can automatically scale or redeploy failing services, eliminating the need for human intervention in certain situations.
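Automated remediation works best when it is bounded, so a script that cannot fix the problem hands it over to a human instead of retrying forever. Here is a small sketch of that pattern. The `check_health` and `restart` callables are hypothetical hooks you would wire to your own service manager, and `FakeService` exists only to make the example runnable.

```python
def remediate(check_health, restart, max_attempts=3):
    """Restart an unhealthy service up to max_attempts times.

    Returns True if the service ends up healthy, or False if a human
    should be paged because automation could not fix it.
    """
    for _ in range(max_attempts):
        if check_health():
            return True
        restart()
    return check_health()


class FakeService:
    """Stand-in for a real service; becomes healthy after N restarts."""

    def __init__(self, restarts_needed):
        self.restarts_needed = restarts_needed
        self.restarts = 0

    def healthy(self):
        return self.restarts >= self.restarts_needed

    def restart(self):
        self.restarts += 1


flaky = FakeService(restarts_needed=2)
print(remediate(flaky.healthy, flaky.restart))  # recovers without a page

stuck = FakeService(restarts_needed=10)
print(remediate(stuck.healthy, stuck.restart))  # gives up and escalates
```

Only the second case would need to produce a notification, which is where the reduction in alert volume comes from.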

6. Set Clear Runbooks and Documentation

Even for alerts that genuinely need human intervention, well-defined runbooks remove much of the pressure of figuring out what to do in the middle of an incident. With a step-by-step guide to follow, on-call engineers can troubleshoot quickly and with confidence.

- Runbook Standardization: Make sure every alert type has its own runbook describing the troubleshooting steps and possible solutions.

- Onboarding New Engineers: Have new engineers work through the runbooks during onboarding so they learn the common problems and how to fix them.

7. Regularly Review and Tune Alerts

Alert rules should not be static. As your infrastructure and services evolve, so too should your monitoring and alerting configurations.

- Monthly or Quarterly Reviews: Set up regular intervals to review alert thresholds, rules, and notifications. Use past incidents to guide changes—if certain alerts were consistently ignored, consider revising or eliminating them.

- Incorporate Feedback: Encourage on-call engineers to provide feedback on which alerts are useful and which are noise. This feedback loop helps keep your alerting system sharp and effective.
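A periodic review can start from a simple question: which alerts fire often but are rarely acted on? The sketch below assumes you can export a history of `(alert_name, was_actioned)` pairs from your incident tooling. The alert names, the minimum count of 5, and the 20% action-rate cutoff are arbitrary illustrative values.

```python
from collections import Counter


def noisy_alerts(history, min_fired=5, max_action_rate=0.2):
    """Return alert names that fired at least min_fired times but were
    acted on in at most max_action_rate of those firings."""
    fired = Counter()
    actioned = Counter()
    for name, was_actioned in history:
        fired[name] += 1
        if was_actioned:
            actioned[name] += 1
    return sorted(
        name
        for name, count in fired.items()
        if count >= min_fired and actioned[name] / count <= max_action_rate
    )


# Hypothetical quarter of alert history.
history = (
    [("disk_latency_spike", False)] * 9
    + [("disk_latency_spike", True)]
    + [("api_5xx_rate", True)] * 6
    + [("cert_expiry", False)] * 2
)

print(noisy_alerts(history))
```

Alerts returned by a report like this are candidates for retuning or removal, ideally after checking with the engineers who received them.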

Wrapping Up: Focus on Signal, Not Noise

Alert fatigue is the result of too much noise and not enough signal. By optimizing alert rules, categorizing notifications, consolidating duplicates, and automating where possible, you can build a far more manageable and efficient monitoring system. Keep in mind that the purpose of monitoring is to surface relevant, actionable information, not to bury your team under every minor blip.

With these practices in place, your team should feel less stressed, respond to incidents more quickly, and be far less likely to miss a serious alert.

To dig deeper into alert management, read Google’s Site Reliability Engineering books or try Netdata’s monitoring solution, which offers sophisticated alerting capabilities designed for high-performance teams.