For a tool like Netdata, monitoring crashes and abnormal events extends far beyond bug fixing—it’s essential for identifying edge cases, preventing regressions, and delivering the most dependable observability experience possible. With millions of daily downloads, each event provides a vital signal for maintaining the integrity of our systems.

The Challenge with Traditional Solutions

Over the years, we’ve evaluated many monitoring tools, each with significant limitations:

| Tool | Strengths | Limitations |
|---|---|---|
| Sentry | • Comprehensive error tracking features • Detailed stack traces | • Per-event pricing model becomes prohibitive at scale • Forces sampling, which reduces visibility into critical issues • Compromises complete error capture for cost control |
| GCP BigQuery & Similar | • Powerful query capabilities • Flexible data processing • High scalability potential | • Complex reporting setup and maintenance • Significant costs at high event volumes • Requires specialized technical expertise |
| Other Solutions | • Various specialized features • Some open-source flexibility | • Either too inflexible for custom requirements • Or prohibitively expensive at full-capture scale • Often require compromising between detail and cost |

We consistently encountered these core challenges:

- Customization Difficulties: Off-the-shelf tools treat annotations as special cases, often missing the flexibility required for deep analysis.

- High Costs: A per-event pricing model can become prohibitively expensive at scale.

- Effortful Reporting: Generating nuanced, detailed reports required a lot of manual intervention and often didn’t capture the full complexity of our system’s behavior.

Identifying the Requirements

For Netdata, we required a solution offering:

- Comprehensive Ingestion: A system capturing every event without sampling, preserving all critical details.

- Complete Customization: A flexible data structure that supports multidimensional analysis across all fields and parameters.

- Economic Scalability: A framework that expands without triggering prohibitive operational expenses.

- Superior Performance: Capacity to handle tens of thousands of events per second while maintaining optimal processing speed.

Our Solution: Simple, Powerful, and Efficient

After reviewing the traditional options, we chose an approach that reuses infrastructure we already have, systemd's journal, and meets each requirement as follows:

| Requirement | Implementation | Benefit |
|---|---|---|
| Zero Sampling | Complete event capture | Every single agent event is preserved, providing full visibility into system behavior |
| Flexible Data Structure | Systemd journal field mapping | Supports multidimensional analysis across all fields and parameters |
| Cost-Effective Scaling | Utilization of existing infrastructure | Eliminates licensing costs while maintaining high performance |
| Exceptional Performance | Lightweight processing pipeline | Efficiently handles up to 20,000 events per second per instance, with horizontal scaling capability |

Core Mechanism: Agent Status Tracking

The implementation required minimal development effort because we realized we already had all the necessary parts!

Each Netdata Agent records its operational status to disk, documenting whether it exited normally or crashed and, in case of a crash, capturing detailed diagnostic information. Upon restart, the Agent evaluates this status file to decide what to report. When anonymous telemetry is enabled or a crash is detected, the Agent transmits this status to our agent-events backend. A deduplication mechanism prevents redundant reporting, ensuring each unique event is logged only once per day per Agent.

Backend Processing Pipeline

The agent-events backend performs the crucial transformation:

First, a lightweight Go-based web server receives JSON payloads via HTTP POST and sends them to stdout. We selected this implementation for its exceptional performance characteristics after evaluating several options (including Python and Node.js alternatives).
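A minimal sketch of such a receiver, using only the Go standard library. The listen address `:8080`, the 1 MiB body limit, and the 204 response are assumptions, not details of Netdata's server. Each body is passed through `json.Compact` so that every event becomes exactly one line on stdout, the line-oriented input that `log2journal json` reads; a mutex keeps concurrent requests from interleaving their lines.

```go
package main

import (
	"bytes"
	"encoding/json"
	"io"
	"log"
	"net/http"
	"os"
	"sync"
)

// maxBodyBytes is an assumed limit on the size of one status payload.
const maxBodyBytes = 1 << 20

// compactLine validates a JSON document and returns it as a single line
// terminated by '\n', so each event is exactly one line on stdout.
func compactLine(body []byte) ([]byte, error) {
	var buf bytes.Buffer
	if err := json.Compact(&buf, body); err != nil {
		return nil, err
	}
	buf.WriteByte('\n')
	return buf.Bytes(), nil
}

func main() {
	var mu sync.Mutex // serializes writes so concurrent requests never interleave lines

	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodPost {
			http.Error(w, "POST only", http.StatusMethodNotAllowed)
			return
		}
		body, err := io.ReadAll(http.MaxBytesReader(w, r.Body, maxBodyBytes))
		if err != nil {
			http.Error(w, "unreadable or oversized body", http.StatusBadRequest)
			return
		}
		line, err := compactLine(body)
		if err != nil {
			http.Error(w, "invalid JSON", http.StatusBadRequest)
			return
		}
		mu.Lock()
		_, err = os.Stdout.Write(line)
		mu.Unlock()
		if err != nil {
			http.Error(w, "write failed", http.StatusInternalServerError)
			return
		}
		w.WriteHeader(http.StatusNoContent)
	})

	// Diagnostics go to stderr via the log package, keeping stdout for events only.
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```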

An example JSON payload of a status event:

```json
{
  "message": "Netdata was last crashed while starting, because of a fatal error",
  "cause": "fatal on start",
  "@timestamp": "datetime",
  "version": 8,
  "version_saved": 8,
  "agent": {
    "id": "UUID",
    "ephemeral_id": "UUID",
    "version": "v2.2.6",
    "uptime": 20,
    "ND_node_id": null,
    "ND_claim_id": null,
    "ND_restarts": 55,
    "ND_profile": [
      "parent"
    ],
    "ND_status": "initializing",
    "ND_exit_reason": [
      "fatal"
    ],
    "ND_install_type": "custom"
  },
  "host": {
    "architecture": "x86_64",
    "virtualization": "none",
    "container": "none",
    "uptime": 1227119,
    "boot": {
      "id": "04077a68-8296-4abf-bd77-f20527bb5cde"
    },
    "memory": {
      "total": 134722347008,
      "free": 51673772032
    },
    "disk": {
      "db": {
        "total": 3936551493632,
        "free": 1999957258240,
        "inodes_total": 244170752,
        "inodes_free": 239307909,
        "read_only": false
      }
    }
  },
  "os": {
    "name": "Manjaro Linux",
    "version": "25.0.0",
    "family": "manjaro",
    "platform": "arch"
  },
  "fatal": {
    "line": 0,
    "filename": "",
    "function": "",
    "message": "Cannot create unique machine id file '/var/lib/netdata/registry/netdata.public.unique.id'",
    "errno": "13, Permission denied",
    "thread": "",
    "stack_trace": "stack_trace_formatter+0x196\nlog_field_strdupz+0x237\nnd_logger_log_fields.constprop.0+0xc4\nnd_logger.constprop.0+0x597\nnetdata_logger_fatal+0x18d\nregistry_get_this_machine_guid.part.0+0x283\nregistry_get_this_machine_guid.constprop.0+0x20\nnetdata_main+0x264c\nmain+0x2d __libc_init_first+0x8a\n__libc_start_main+0x85 _start+0x21"
  },
  "dedup": [
    {
      "@timestamp": "2025-03-01T12:23:43.61Z",
      "hash": 15037880939850199034
    }
  ]
}
```

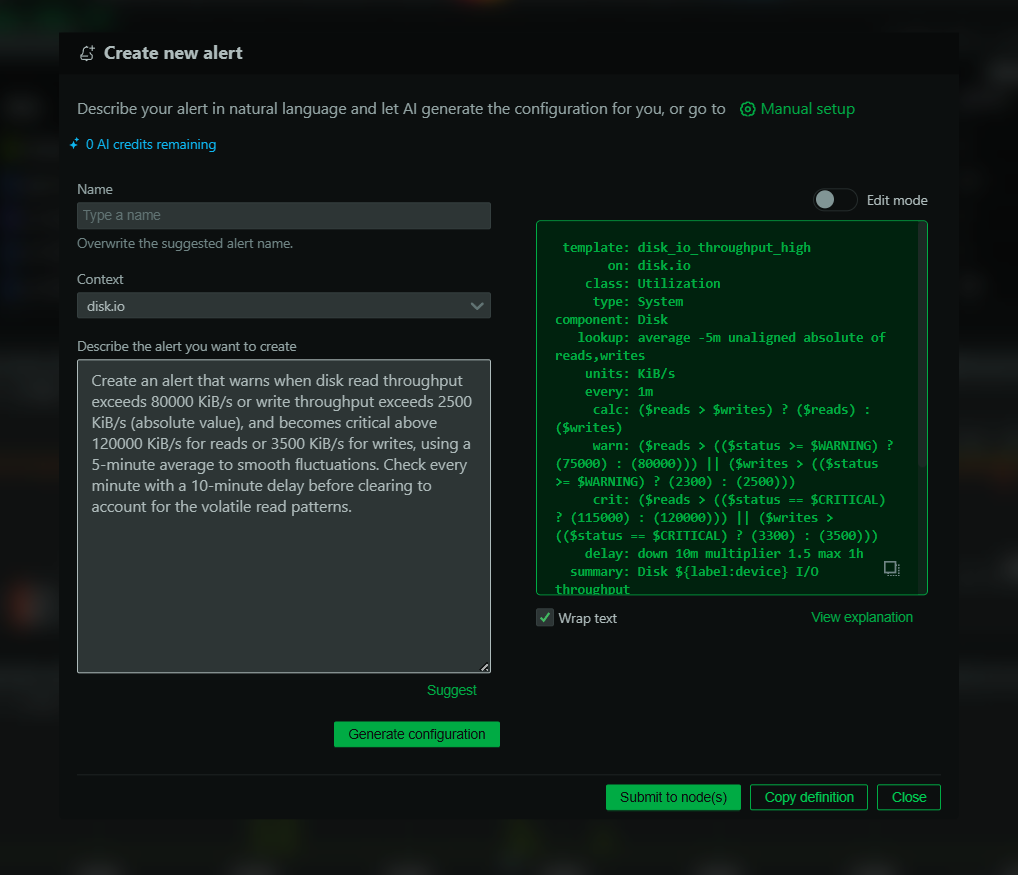

Our processing pipeline uses this simple sequence:

```sh
web-server | log2journal json | systemd-cat-native
```

Both log2journal and systemd-cat-native are included with Netdata, making this solution immediately available to all users.

Data Transformation and Storage

The pipeline transforms complex nested JSON structures into flattened journal entries. Regardless of field count or nesting depth, log2journal expands every data point into a discrete journal field, preserving complete information while enabling powerful querying.
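The flattening rule visible in the mapping table (prefix `AE_`, path segments joined with `_`, upper-casing, non-alphanumeric characters such as `@` turned into `_`, array elements numbered) can be sketched in Go. This illustrates the rule only and is not log2journal's implementation: the helper names are hypothetical, JSON `null` values are assumed to produce no field, and the explicit renames in the table (for example `message` to `MESSAGE`, or `fatal.line` to `CODE_LINE`) would need separate rename rules that are not shown.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
	"strings"
)

// sanitize upper-cases a JSON key and replaces every character outside
// A-Z and 0-9 with '_' (so "@timestamp" becomes "_TIMESTAMP").
func sanitize(key string) string {
	var b strings.Builder
	for _, r := range strings.ToUpper(key) {
		if (r >= 'A' && r <= 'Z') || (r >= '0' && r <= '9') {
			b.WriteRune(r)
		} else {
			b.WriteByte('_')
		}
	}
	return b.String()
}

// flatten walks a decoded JSON value and stores one field per leaf:
// object keys are appended with "_", array elements get their index.
func flatten(prefix string, v any, out map[string]string) {
	switch t := v.(type) {
	case map[string]any:
		for k, child := range t {
			flatten(prefix+"_"+sanitize(k), child, out)
		}
	case []any:
		for i, child := range t {
			flatten(fmt.Sprintf("%s_%d", prefix, i), child, out)
		}
	case nil:
		// Assumption: JSON null produces no journal field.
	case string:
		out[prefix] = t
	default:
		// json.Number and bool both print their literal text.
		out[prefix] = fmt.Sprint(t)
	}
}

// flattenJSON decodes one payload; UseNumber keeps large integers such as
// the dedup hash exact instead of converting them to float64.
func flattenJSON(prefix string, payload string) (map[string]string, error) {
	dec := json.NewDecoder(strings.NewReader(payload))
	dec.UseNumber()
	var v any
	if err := dec.Decode(&v); err != nil {
		return nil, err
	}
	out := map[string]string{}
	flatten(prefix, v, out)
	return out, nil
}

func main() {
	payload := `{"cause": "fatal on start", "@timestamp": "2025-03-01T12:23:43.61Z",
		"agent": {"id": "UUID", "ND_profile": ["parent"], "ND_node_id": null},
		"host": {"memory": {"total": 134722347008}, "disk": {"db": {"read_only": false}}},
		"dedup": [{"hash": 15037880939850199034}]}`

	fields, err := flattenJSON("AE", payload)
	if err != nil {
		panic(err)
	}
	keys := make([]string, 0, len(fields))
	for k := range fields {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	for _, k := range keys {
		fmt.Printf("%s=%s\n", k, fields[k])
	}
}
```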

To keep system logs clean, we isolate this process in a dedicated systemd unit with its own log namespace, providing operational separation and preserving analytical power.
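A minimal sketch of such a unit, assuming a hypothetical binary path `/usr/local/bin/agent-events-web-server`, a namespace we named `agent-events`, and that `log2journal` and `systemd-cat-native` are on the service's `PATH`. `LogNamespace=` is a standard systemd directive (available since systemd 245) that connects everything the unit logs through journald to a separate journal instance.

```ini
# /etc/systemd/system/agent-events.service (hypothetical name and path)
[Unit]
Description=Netdata agent-events ingestion pipeline (sketch)
After=network.target

[Service]
# Run the pipeline through a shell so the pipes work.
# The web server path is hypothetical; log2journal and systemd-cat-native
# are assumed to be on the service's PATH.
ExecStart=/bin/sh -c '/usr/local/bin/agent-events-web-server | log2journal json | systemd-cat-native'
# Store everything this unit logs in a dedicated journald namespace,
# keeping the system journal clean.
LogNamespace=agent-events
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

Entries can then be queried without touching the system journal, for example with `journalctl --namespace=agent-events AE_CAUSE='fatal on start'`.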

The table below maps each JSON field to the systemd journal field it generates:

| Original JSON Key | Generated Journald Key | Reason for Tracking in Netdata |
|---|---|---|
| `message` | `MESSAGE` | A high-level description of why the agent restarted. |
| `cause` | `AE_CAUSE` | A shorter code indicating why the agent restarted. One of: `fatal and exit`, `exit on system shutdown`, `exit to update`, `exit and updated`, `exit instructed`, `abnormal power off`, `out of memory`, `disk read-only`, `disk full`, `disk almost full`, `fatal on start`, `killed hard on start`, `fatal on exit`, `killed hard on shutdown`, `killed hard on update`, `killed hard on exit`, `killed fatal`, `killed hard`. |
| `@timestamp` | `AE__TIMESTAMP` | Captures the exact time the status file was saved. |
| `version` | `AE_VERSION` | The schema version of the posted status file. |
| `version_saved` | `AE_VERSION_SAVED` | The schema version of the saved status file. |
| `agent.id` | `AE_AGENT_ID` | The `MACHINE_GUID` of the agent. |
| `agent.ephemeral_id` | `AE_AGENT_EPHEMERAL_ID` | The unique `INVOCATION_ID` of the agent. |
| `agent.version` | `AE_AGENT_VERSION` | The version of the agent. |
| `agent.uptime` | `AE_AGENT_UPTIME` | How long the agent had been running when the status file was generated. |
| `agent.ND_node_id` | `AE_AGENT_NODE_ID` | The Netdata Cloud Node ID of the agent. |
| `agent.ND_claim_id` | `AE_AGENT_CLAIM_ID` | The Netdata Cloud Claim ID of the agent. |
| `agent.ND_restarts` | `AE_AGENT_RESTARTS` | The number of restarts the agent has had so far; a strong indication of crash loops. |
| `agent.ND_profile` | `AE_AGENT_ND_PROFILE_{n}` | The configuration profile of the agent. |
| `agent.ND_status` | `AE_STATUS` | The operational status of the agent (`initializing`, `running`, `exiting`, `exited`). |
| `agent.ND_exit_reason` | `AE_AGENT_ND_EXIT_REASON_{n}` | Records the reason for the agent's exit; one or more of: `signal-bus-error`, `signal-segmentation-fault`, `signal-floating-point-exception`, `signal-illegal-instruction`, `out-of-memory`, `already-running`, `fatal`, `api-quit`, `cmd-exit`, `signal-quit`, `signal-terminate`, `signal-interrupt`, `service-stop`, `system-shutdown`, `update`. |
| `agent.ND_install_type` | `AE_AGENT_INSTALL_TYPE` | Indicates the installation method (e.g., `custom`, `binpkg`); different installs may behave differently under error conditions. |
| `host.architecture` | `AE_HOST_ARCHITECTURE` | Provides the system architecture, which is useful for reproducing environment-specific issues. |
| `host.virtualization` | `AE_HOST_VIRTUALIZATION` | Indicates whether the system runs under virtualization; such environments can have unique resource or timing constraints affecting stability. |
| `host.container` | `AE_HOST_CONTAINER` | Specifies whether the host is containerized, which is critical when diagnosing errors in containerized deployments. |
| `host.uptime` | `AE_HOST_UPTIME` | Reflects the host's overall uptime, allowing correlation between system stability and agent crashes. |
| `host.boot.id` | `AE_HOST_BOOT_ID` | A unique identifier for the current boot session, helpful for correlating events across system reboots. |
| `host.memory.total` | `AE_HOST_MEMORY_TOTAL` | Shows the total memory; important for diagnosing whether resource exhaustion contributed to the fatal error. |
| `host.memory.free` | `AE_HOST_MEMORY_FREE` | Indicates the free memory at crash time, which can highlight memory pressure. |
| `host.disk.db.total` | `AE_HOST_DISK_DB_TOTAL` | Reflects the total disk space available for database/log storage; issues here might affect logging during fatal errors. |
| `host.disk.db.free` | `AE_HOST_DISK_DB_FREE` | Shows the available disk space; low disk space may hinder proper logging and recovery after a fatal error. |
| `host.disk.db.inodes_total` | `AE_HOST_DISK_DB_INODES_TOTAL` | Provides the total number of inodes, useful for diagnosing filesystem constraints that could contribute to system errors. |
| `host.disk.db.inodes_free` | `AE_HOST_DISK_DB_INODES_FREE` | Indicates the number of free inodes; running out of inodes can cause filesystem errors that affect Netdata's operation. |
| `host.disk.db.read_only` | `AE_HOST_DISK_DB_READ_ONLY` | Flags whether the disk is mounted read-only, which may prevent Netdata from writing necessary logs or recovering from errors. |
| `os.type` | `AE_OS_TYPE` | Defines the operating system type; critical for understanding the context in which the error occurred. |
| `os.kernel` | `AE_OS_KERNEL` | Provides the kernel version, which can be cross-referenced with known issues in specific kernel releases that might lead to fatal errors. |
| `os.name` | `AE_OS_NAME` | Indicates the operating system distribution, which helps narrow down environment-specific issues. |
| `os.version` | `AE_OS_VERSION` | Shows the OS version, essential for linking a fatal error to recent system updates or known bugs. |
| `os.family` | `AE_OS_FAMILY` | Groups the OS into a family (e.g., linux), aiding high-level analysis across similar systems. |
| `os.platform` | `AE_OS_PLATFORM` | Provides platform details (often rewritten to include the OS family), key for identifying platform-specific compatibility issues that might cause crashes. |
| `fatal.line` | `CODE_LINE` | Pinpoints the line number in the source code where the fatal error occurred, vital for tracking down the faulty code. |
| `fatal.filename` | `CODE_FILE` | Specifies the source file in which the fatal error was triggered, which speeds up code review and pinpointing the problem area. |
| `fatal.function` | `CODE_FUNC` | Identifies the function where the fatal error occurred, providing context about the code path and facilitating targeted debugging. |
| `fatal.message` | `AE_FATAL_MESSAGE` | Contains the detailed error message; essential for understanding what went wrong and the context surrounding the fatal event (later merged into the main `MESSAGE` field). |
| `fatal.errno` | `AE_FATAL_ERRNO` | Provides the error number associated with the failure, which helps map the error to known issues or system error codes. |
| `fatal.thread` | `AE_FATAL_THREAD` | Indicates the thread in which the error occurred, important in multi-threaded scenarios for isolating concurrency issues. |
| `fatal.stack_trace` | `AE_FATAL_STACK_TRACE` | Contains the stack trace at the point of failure, a critical asset for root cause analysis and for understanding the chain of function calls leading to the error. |
| `dedup[].@timestamp` | `AE_DEDUP_{n}__TIMESTAMP` | Records the timestamp of each deduplication entry; used to filter out duplicate crash events and correlate error clusters over time. |
| `dedup[].hash` | `AE_DEDUP_{n}_HASH` | Provides a unique hash for each deduplication entry, which helps recognize recurring error signatures and prevent redundant alerts. |

Implementation and Results

The entire implementation process took only a few days, though we encountered several technical obstacles:

- Robust Stack Trace Capture: Obtaining reliable stack traces across various crash scenarios required multiple iterations of our signal handling mechanisms to ensure complete diagnostic information.

- Schema Optimization: Identifying the precise fields necessary for comprehensive root cause analysis involved careful refinement of the JSON schema sent to the agent-events backend.

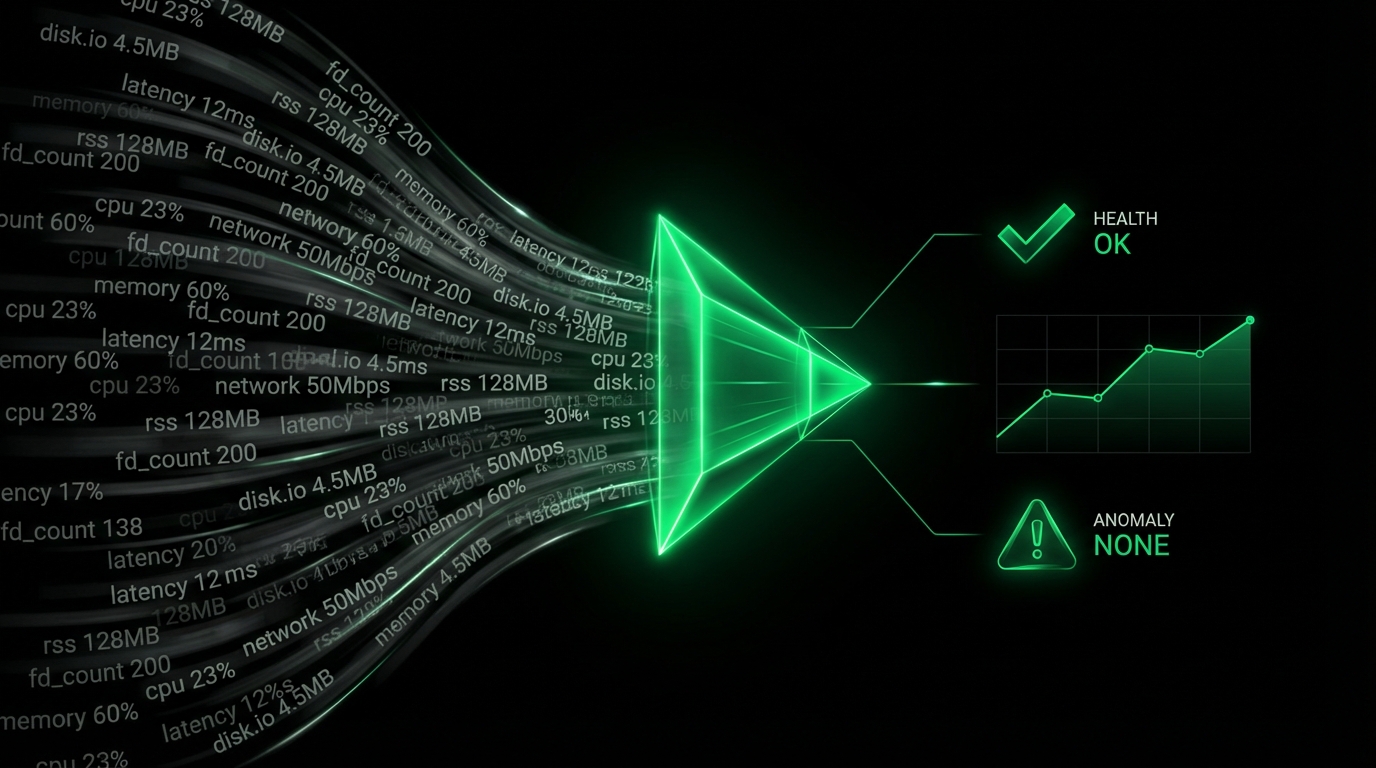

Once these challenges were resolved, the approach paid off. The Netdata Logs UI became a powerful analytical environment, allowing us to filter, correlate, and investigate any combination of events through journald queries. We gained unprecedented visibility into our codebase's behavior across diverse environments and configurations.

By implementing this system, we identified and resolved dozens of critical issues, dramatically improving Netdata's reliability. The solution also proved highly scalable: each backend instance processes approximately 20,000 events per second, and additional instances can be deployed as needed. The system needs only modest computing resources, plus enough storage to cover our retention period.

Conclusion

Our monitoring journey taught us that effective systems must balance customization, cost, and performance.

By combining a lightweight HTTP server with log2journal and systemd’s journald, we created a solution that captures every event without sampling or compromise.

This straightforward approach provides powerful insights while eliminating per-event costs, showing that, for a workload like ours, native tools and thoughtful engineering can outperform expensive specialized solutions.

The system has already helped us identify and resolve dozens of critical issues, significantly improving Netdata’s reliability for our users worldwide.

As we continue to grow, this foundation will evolve with us, providing the visibility we need to maintain the highest standards of software quality.

FAQ

Q1: Do you collect IPs?

No, we don’t need IPs, and we don’t collect any.

Q2: Why do you collect normal restarts?

We collect normal restarts only when anonymous telemetry is enabled (it is enabled by default, and users can opt out). Even then, we collect at most one normal restart per agent per day; in fact, the same once-per-day limit applies to every kind of restart or crash.

We collect normal restarts to understand the spread of an issue. We provide packages for a lot of Linux distributions, Linux kernel versions, CPU architectures, library versions, etc. For many combinations there are just a handful of users.

When only a few events are reported for a combination, it is important to know whether all of those agents are crashing, only a few of them, or just a tiny percentage. Each case calls for a completely different investigation.

So, the normal restarts act like a baseline for us to understand the severity of issues.
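Using normal restarts as a baseline amounts to computing, for each combination of interest, the share of distinct agents that reported at least one abnormal restart. A minimal Go sketch, grouping only by OS name for brevity; which `AE_CAUSE` values count as clean restarts is our assumption, and `event`, `normalCauses`, and `crashShare` are hypothetical names.

```go
package main

import "fmt"

// normalCauses is an assumption: the AE_CAUSE values treated as clean
// restarts. Every other cause counts as abnormal.
var normalCauses = map[string]bool{
	"exit on system shutdown": true,
	"exit to update":          true,
	"exit and updated":        true,
	"exit instructed":         true,
}

// event is a hypothetical, reduced view of one journal entry.
type event struct {
	AgentID string // AE_AGENT_ID
	OSName  string // AE_OS_NAME
	Cause   string // AE_CAUSE
}

// crashShare returns, per OS name, the fraction of distinct agents that
// reported at least one abnormal restart.
func crashShare(events []event) map[string]float64 {
	crashed := map[string]map[string]bool{} // OS name -> agent ID -> crashed at least once
	for _, e := range events {
		if crashed[e.OSName] == nil {
			crashed[e.OSName] = map[string]bool{}
		}
		abnormal := !normalCauses[e.Cause]
		crashed[e.OSName][e.AgentID] = crashed[e.OSName][e.AgentID] || abnormal
	}
	share := map[string]float64{}
	for osName, agents := range crashed {
		n := 0
		for _, c := range agents {
			if c {
				n++
			}
		}
		share[osName] = float64(n) / float64(len(agents))
	}
	return share
}

func main() {
	sample := []event{
		{"a1", "distro-a", "exit instructed"},
		{"a2", "distro-a", "fatal on start"},
		{"a3", "distro-a", "exit to update"},
		{"a4", "distro-a", "exit on system shutdown"},
		{"b1", "distro-b", "killed hard"},
	}
	fmt.Println(crashShare(sample))
}
```

A share near 1.0 points to a platform-wide problem, while a small share points to something specific to a few machines.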

Q3: Why do you need the unique UUID of the agent?

UUIDs allow us to correlate events over time. How many events do we have for this agent? Is it always crashing with the same event? Was it working a few days ago? Did we fix the issue for this particular agent after troubleshooting?

Using these UUIDs, we can find regressions and confirm our patches actually fix the problems for our users.

Keep in mind that we don’t have the technical ability to correlate UUIDs to anonymous open-source users. So, these UUIDs are just random numbers for us.

Q4: Do you collect crash reports when anonymous telemetry is disabled?

No. We only collect crash reports when anonymous telemetry is enabled.

Q5: Does telemetry affect my system’s performance?

No. Telemetry only kicks in briefly when the agent starts, stops, or crashes. Other than that, the agent is not affected.

Q6: Is any of my telemetry data shared with third parties or used for purposes beyond crash analysis?

All telemetry data is used exclusively for internal analysis to diagnose issues, track regressions, and improve Netdata’s reliability. We don’t share raw data or any personally identifiable information with third parties.

In some cases, these reports also help steer our focus. A good example is the breakdown per operating system, which lets us prioritize binary packages for the most widely used distributions.

Q7: How long do you store the telemetry data?

We keep this data for 3 months, to track how different Netdata releases affect the stability and reliability of the agent over time.

Q8: Can I opt out of telemetry entirely, and what are the implications of doing so?

Of course. If you choose to disable it, you won’t contribute data that helps identify and resolve Netdata issues. Opting out might also mean you miss out on receiving some benefits derived from our improved diagnostics and performance enhancements.