Hadoop Distributed File System (HDFS) Monitoring

What Is HDFS?

The Hadoop Distributed File System (HDFS) is the primary data storage system used by Hadoop applications. It’s designed to store very large data sets reliably and to stream those data sets at high bandwidth to user applications.

Monitoring HDFS With Netdata

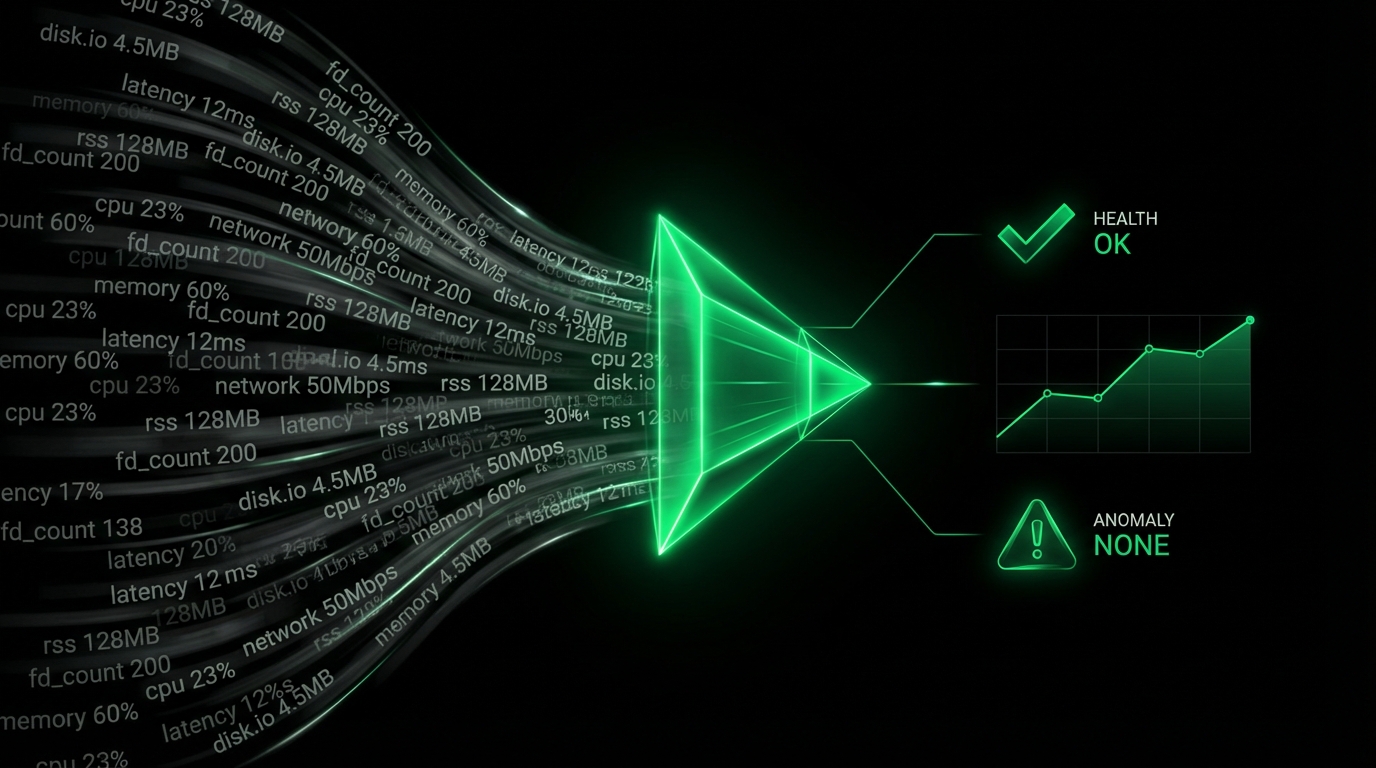

Monitoring HDFS is central to data reliability and system performance. Netdata’s HDFS monitoring tool gathers metrics via JMX from the HDFS daemons, so anomalies in memory use, RPC traffic, or storage capacity can be detected and addressed quickly.
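To make the JMX step concrete, here is a minimal Python sketch of reading metrics from the JSON document that Hadoop daemons serve on their `/jmx` HTTP path. It is an illustration only, not Netdata's implementation. The URL in the comment, the helper names `fetch_jmx` and `find_bean`, and the sample values are assumptions; check the port and bean names against your own cluster.

```python
import json
from urllib.request import urlopen


def fetch_jmx(url, timeout=5.0):
    """Fetch and decode the JSON document served by a daemon's /jmx path."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def find_bean(payload, name):
    """Return the first bean whose "name" field equals `name`, or None."""
    for bean in payload.get("beans", []):
        if bean.get("name") == name:
            return bean
    return None


# Sample payload in the general shape of a /jmx response.
# The values are made up for illustration.
SAMPLE = {
    "beans": [
        {
            "name": "Hadoop:service=NameNode,name=JvmMetrics",
            "MemHeapUsedM": 512.5,
            "GcCount": 42,
        }
    ]
}

jvm = find_bean(SAMPLE, "Hadoop:service=NameNode,name=JvmMetrics")
print(jvm["MemHeapUsedM"], jvm["GcCount"])

# Against a live daemon (assumed address, adjust for your cluster):
# payload = fetch_jmx("http://localhost:9870/jmx")
```

Keeping the parsing separate from the HTTP call makes the lookup easy to test without a running cluster.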

Explore our Live Demo to see how you can monitor HDFS in real time using Netdata.

Why Is HDFS Monitoring Important?

Understanding the health and efficiency of your HDFS cluster helps prevent data loss and improve performance. Capacity planning, detecting failed disks, and tracking overall cluster health are all essential to keeping stored data available and reliable.

What Are The Benefits Of Using HDFS Monitoring Tools?

Monitoring tools such as Netdata let teams visualize real-time metrics, set alerts, and diagnose issues before they escalate. These capabilities help maintain high availability and consistent performance.

Understanding HDFS Performance Metrics

Effective HDFS monitoring requires understanding various metrics:

HDFS Heap Memory

Tracks JVM heap memory usage and availability in the HDFS daemons to help prevent out-of-memory errors.

GC Events and Time

Monitors the number of garbage collection events and the time spent in them, because frequent or long collections can pause a daemon and slow down operations.
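JVM garbage collection figures are typically exposed as cumulative counters, so a per-second value has to be derived from two successive samples. The sketch below shows one way to do that. The function names are hypothetical, and treating a decreasing counter as a daemon restart is an assumption of this example, not a documented Netdata behavior.

```python
def counter_rate(prev, curr, interval_s):
    """Per-second rate between two samples of a cumulative counter."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    delta = curr - prev
    if delta < 0:
        # Assumption: the counter restarted from zero (daemon restart),
        # so everything counted so far happened in this interval.
        delta = curr
    return delta / interval_s


def gc_time_share(prev_ms, curr_ms, interval_s):
    """Fraction of wall-clock time spent in GC during the interval."""
    return counter_rate(prev_ms, curr_ms, interval_s) / 1000.0


# Two samples taken 10 seconds apart (made-up values).
print(counter_rate(100, 130, 10))       # GC events per second
print(gc_time_share(5000, 5500, 10))    # share of time spent in GC
```

A rising GC time share alongside growing heap usage is usually a more useful signal than the raw event count alone.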

Number of Threads

Tracks the number of JVM threads in each state (for example runnable, blocked, and waiting) to reveal contention or stalled task execution.

RPC Bandwidth and Calls

Measures Remote Procedure Call (RPC) bandwidth and the number of calls to detect network bottlenecks.
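As a rough illustration of how RPC bandwidth can be expressed in kilobits per second, the sketch below converts the growth of a byte counter into that unit. The field names `ReceivedBytes` and `SentBytes` are assumed to match the RPC activity bean of your Hadoop version, the sample values are invented, and the conversion uses 1 kilobit = 1000 bits.

```python
def kilobits_per_second(prev_bytes, curr_bytes, interval_s):
    """Convert the growth of a byte counter into kilobits per second."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    # Ignore negative deltas (for example after a daemon restart).
    delta_bytes = max(curr_bytes - prev_bytes, 0)
    return delta_bytes * 8 / 1000 / interval_s


# Two samples taken 10 seconds apart (made-up values).
prev = {"ReceivedBytes": 1_000_000, "SentBytes": 4_000_000}
curr = {"ReceivedBytes": 1_250_000, "SentBytes": 4_500_000}

for key in ("ReceivedBytes", "SentBytes"):
    print(key, kilobits_per_second(prev[key], curr[key], 10.0))
```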

Capacity Across Datanodes

Monitors the used and remaining capacity of datanodes to preemptively address storage issues.
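The capacity figures can be turned into a usage percentage that is easier to alert on. The following sketch assumes a bean with `CapacityTotal`, `CapacityUsed`, and `CapacityRemaining` fields reported in bytes; the `Capacity` class, the helper name, and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capacity:
    total_bytes: int
    used_bytes: int
    remaining_bytes: int

    @property
    def used_percent(self):
        """Share of total capacity used by HDFS, in percent."""
        if self.total_bytes <= 0:
            return 0.0
        return 100.0 * self.used_bytes / self.total_bytes


def capacity_from_bean(bean):
    """Build a Capacity from a bean dict (field names are assumptions)."""
    return Capacity(
        total_bytes=int(bean["CapacityTotal"]),
        used_bytes=int(bean["CapacityUsed"]),
        remaining_bytes=int(bean["CapacityRemaining"]),
    )


# Made-up values: used + remaining can be less than total because
# non-HDFS data on the same disks also consumes space.
cap = capacity_from_bean(
    {"CapacityTotal": 1000, "CapacityUsed": 250, "CapacityRemaining": 700}
)
print(cap.used_percent)
```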

| Metric | Description |
|---|---|
| hdfs.heap_memory | Memory utilization in MiB across different heap memory states. |
| hdfs.gc_count_total | Total GC events per second. |
| hdfs.rpc_bandwidth | RPC bandwidth in kilobits per second. |
| hdfs.capacity | Total and used capacity across datanodes in KiB. |

Advanced HDFS Performance Monitoring Techniques

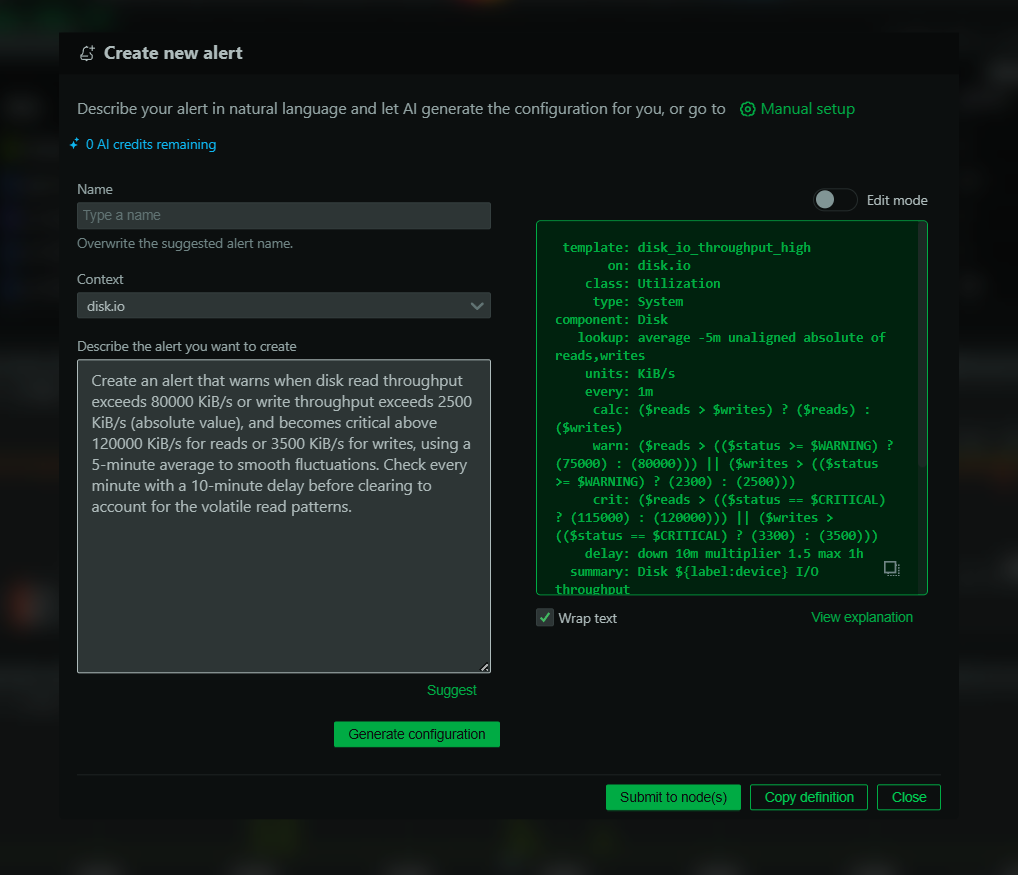

Advanced techniques include setting specific alerts for key metrics like hdfs.capacity and hdfs.blocks, creating custom dashboards, and integrating with alerting systems so that threshold breaches are routed and handled efficiently.
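To illustrate the idea of a threshold alert on a metric such as capacity usage, here is a small Python sketch with separate raise and clear levels, which keeps the alert from flapping when the value hovers near the limit. The class name and the 90 and 85 percent thresholds are hypothetical examples, not Netdata defaults or Netdata's alert syntax.

```python
class ThresholdAlert:
    """Alert that raises at one level and clears at a lower one."""

    def __init__(self, raise_at, clear_at):
        if clear_at > raise_at:
            raise ValueError("clear_at must not exceed raise_at")
        self.raise_at = raise_at
        self.clear_at = clear_at
        self.active = False

    def update(self, value):
        """Feed a new sample and return whether the alert is active."""
        if self.active:
            if value < self.clear_at:
                self.active = False
        elif value >= self.raise_at:
            self.active = True
        return self.active


# Capacity usage samples in percent (made-up values).
alert = ThresholdAlert(raise_at=90.0, clear_at=85.0)
states = [alert.update(v) for v in (80.0, 91.0, 88.0, 84.0, 89.0)]
print(states)
```

The gap between the two levels is a design choice: a wider gap means fewer repeated notifications but a slower return to the clear state.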

Diagnose Root Causes Or Performance Issues Using Key HDFS Statistics & Metrics

Use both predefined and user-defined alerts to diagnose and resolve issues quickly. Detailed metrics, viewed together on a single observability platform, make it easier to trace a symptom back to its root cause.

Get started on the path to simplified HDFS monitoring with Netdata. Sign up for a Free Trial and leverage a reliable HDFS monitoring tool.

FAQs

What Is HDFS Monitoring?

HDFS monitoring involves observing the system’s performance and health to ensure the Hadoop Distributed File System operates efficiently and reliably.

Why Is HDFS Monitoring Important?

By monitoring HDFS, organizations can prevent downtime, manage storage capacities efficiently, and ensure the system’s overall health aligns with data management goals.

What Does An HDFS Monitor Do?

An HDFS monitor aggregates real-time metrics, visualizes them, and can trigger alerts upon detecting anomalies.

How Can I Monitor HDFS In Real Time?

You can monitor HDFS in real time with Netdata, which provides dashboards and alerts for HDFS metrics.