MongoDB Monitoring

What Is MongoDB?

MongoDB is a leading NoSQL document database designed for flexibility, scalability, and performance. It stores data as flexible, JSON-like documents (encoded internally as BSON), which makes it well suited to large volumes of semi-structured or rapidly evolving data. For more insights, check out MongoDB’s official site.

Monitoring MongoDB With Netdata

Netdata provides comprehensive monitoring for MongoDB, giving users real-time insight into their MongoDB servers. With Netdata’s MongoDB collector, users can track critical metrics as they change and troubleshoot problems more effectively.
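Netdata gathers these metrics for you, but the idea behind most per-second charts is easy to illustrate. The following is a minimal Python sketch, not Netdata’s actual implementation, that polls a source of cumulative counters and turns consecutive readings into per-second rates. The `poll_rates` function and the `fetch` callable are hypothetical names introduced here. With the pymongo driver, `fetch` could wrap `client.admin.command("serverStatus")` and return the counters of interest as a flat dictionary.

```python
import time
from typing import Callable, Dict, Iterator

Counters = Dict[str, float]


def poll_rates(
    fetch: Callable[[], Counters],
    interval: float = 1.0,
    samples: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[Counters]:
    """Yield one dict of per-second rates for each pair of consecutive readings.

    fetch (hypothetical) returns cumulative counters, e.g. opcounters taken
    from serverStatus. The delta is divided by the nominal interval; a real
    collector would measure the actual elapsed time between readings.
    """
    previous = fetch()
    for _ in range(samples - 1):
        sleep(interval)
        current = fetch()
        yield {
            name: (value - previous[name]) / interval
            for name, value in current.items()
            # Skip counters that are new or went backwards (e.g. after a restart).
            if name in previous and value >= previous[name]
        }
        previous = current
```

Injecting `sleep` keeps the function testable without a live server; in normal use the default `time.sleep` paces the loop.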

Why Is MongoDB Monitoring Important?

Monitoring MongoDB is essential for performance tuning, maintaining high availability, and protecting data integrity. It helps you preempt potential issues, manage server load effectively, and optimize database operations, reducing the risk of unexpected downtime.

What Are The Benefits Of Using MongoDB Monitoring Tools?

MongoDB monitoring tools, such as Netdata, offer several advantages:

- Real-time monitoring and visualization of MongoDB metrics.

- Instant notifications about performance anomalies.

- Deep insight into operational and transactional activity.

- A rich, intuitive dashboard interface.

Understanding MongoDB Performance Metrics

Monitoring MongoDB involves tracking many performance metrics. Here are some key metrics that reveal the performance and health of MongoDB databases:

Global Metrics

- mongodb.operations_rate: Tracks the number of database operations per second, broken down into reads, writes, and commands.

- mongodb.operations_latency_time: Monitors the latency of read, write, and command operations in milliseconds.
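MongoDB’s `serverStatus` command exposes an `opLatencies` document whose `reads`, `writes`, and `commands` entries each hold a cumulative operation count (`ops`) and a cumulative latency total in microseconds (`latency`). As a sketch of how figures like the two above can be derived, without claiming this is exactly how Netdata computes them, the hypothetical helper below diffs two snapshots to get operations per second and the average latency, in milliseconds, of the operations completed in between.

```python
from typing import Dict

OP_TYPES = ("reads", "writes", "commands")


def ops_and_latency(prev: Dict, curr: Dict, interval_s: float) -> Dict[str, Dict[str, float]]:
    """Derive ops/s and average latency (ms) per operation type.

    prev and curr are the "opLatencies" sub-documents of two serverStatus
    results taken interval_s seconds apart.
    """
    result = {}
    for op in OP_TYPES:
        d_ops = curr[op]["ops"] - prev[op]["ops"]
        d_latency_us = curr[op]["latency"] - prev[op]["latency"]
        result[op] = {
            "ops_per_sec": d_ops / interval_s,
            # Average latency of the operations completed during the interval;
            # microseconds are converted to milliseconds.
            "avg_latency_ms": (d_latency_us / d_ops) / 1000 if d_ops > 0 else 0.0,
        }
    return result
```

Averaging over the interval, rather than dividing the all-time totals, keeps a long-running server’s history from masking a recent latency spike.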

Database Performance

- mongodb.database_documents_count: Displays the total number of documents stored in a database.

- mongodb.database_data_size: Records the total size of the uncompressed data held in the database, in bytes (this is not the on-disk storage size).
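Both per-database values correspond to fields of MongoDB’s `dbStats` command, which reports the document count as `objects` and the uncompressed data size as `dataSize` (in bytes with the default `scale` of 1). The sketch below, with hypothetical function names, maps a `dbStats` result onto the two metrics and shows how pymongo’s `list_database_names()` and `Database.command("dbStats")` could be used to collect them for every database.

```python
from typing import Any, Dict


def database_metrics(db_stats: Dict[str, Any]) -> Dict[str, int]:
    """Extract the document count and data size from a dbStats result."""
    return {
        "documents_count": int(db_stats["objects"]),
        # dataSize is uncompressed; storageSize would give the on-disk footprint.
        "data_size_bytes": int(db_stats["dataSize"]),
    }


def collect_all(client: Any) -> Dict[str, Dict[str, int]]:
    """Run dbStats on every database; client is assumed to be a pymongo MongoClient."""
    return {
        name: database_metrics(client[name].command("dbStats"))
        for name in client.list_database_names()
    }
```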

Connection Management

- mongodb.connections_usage: Shows how many connections are in use versus still available.

- mongodb.connections_rate: Tracks the rate of new connections being made per second.
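These connection metrics map naturally onto the `connections` document in `serverStatus`: `current` is the number of open incoming connections, `available` is the number still permitted, and `totalCreated` is a cumulative count of all connections ever created. The hypothetical helper below sketches how utilization and a creation rate could be derived from two snapshots; treating `current + available` as the effective connection limit is an approximation.

```python
from typing import Dict


def connection_metrics(prev_conn: Dict, curr_conn: Dict, interval_s: float) -> Dict[str, float]:
    """Summarize two serverStatus "connections" sub-documents taken interval_s apart."""
    used = curr_conn["current"]
    available = curr_conn["available"]
    # totalCreated is cumulative, so the difference is the number created in the interval.
    created = curr_conn["totalCreated"] - prev_conn["totalCreated"]
    return {
        "used": used,
        "available": available,
        "utilization_pct": 100.0 * used / (used + available),
        # Clamp at zero in case the counter reset between snapshots.
        "created_per_sec": max(created, 0) / interval_s,
    }
```

A steady creation rate with flat usage often points to clients opening short-lived connections instead of reusing a pool.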

Cache and Memory

- mongodb.memory_resident_size: Indicates the amount of resident memory used by MongoDB.

- mongodb.memory_virtual_size: Reflects the size of allocated virtual memory.
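Both memory values appear in the `mem` document of `serverStatus`, where MongoDB reports `resident` and `virtual` in mebibytes (MiB). If you want byte values for comparison with other tools, a conversion like the hypothetical helper below is enough; the sketch does not claim to mirror Netdata’s internals. With pymongo it could be called as `memory_metrics(client.admin.command("serverStatus")["mem"])`.

```python
from typing import Any, Dict

MIB = 1024 * 1024  # MongoDB reports mem.resident and mem.virtual in MiB


def memory_metrics(mem: Dict[str, Any]) -> Dict[str, int]:
    """Convert the serverStatus "mem" document's resident/virtual values to bytes."""
    return {
        "resident_bytes": int(mem["resident"]) * MIB,
        "virtual_bytes": int(mem["virtual"]) * MIB,
    }
```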

Replica Set Metrics

- mongodb.repl_set_member_state: Shows the state of each member in the MongoDB replica set.

- mongodb.repl_set_member_replication_lag_time: Measures the replication lag time for a replica set member.
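Replication lag is commonly estimated from `replSetGetStatus` by comparing each secondary’s last applied operation time (`optimeDate`) with the primary’s; Netdata’s exact computation may differ. The sketch below uses that approach with hypothetical helper names. Member state codes 1 (PRIMARY) and 2 (SECONDARY) are MongoDB’s documented values, and arbiters and other states are skipped because they do not replicate data.

```python
from datetime import datetime
from typing import Any, Dict, List

PRIMARY = 1    # replSetGetStatus state code for PRIMARY
SECONDARY = 2  # replSetGetStatus state code for SECONDARY

Member = Dict[str, Any]


def _optime(member: Member) -> datetime:
    """Time of the last operation applied on this member (a datetime in pymongo)."""
    return member["optimeDate"]


def replication_lag_seconds(members: List[Member]) -> Dict[str, float]:
    """Seconds each secondary trails the primary.

    members is the "members" array of a replSetGetStatus result.
    Returns an empty dict when no primary is visible.
    """
    primary = next((m for m in members if m["state"] == PRIMARY), None)
    if primary is None:
        return {}
    return {
        m["name"]: (_optime(primary) - _optime(m)).total_seconds()
        for m in members
        if m["state"] == SECONDARY
    }
```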

The complete list of available metrics is in our MongoDB collector documentation.

Advanced MongoDB Performance Monitoring Techniques

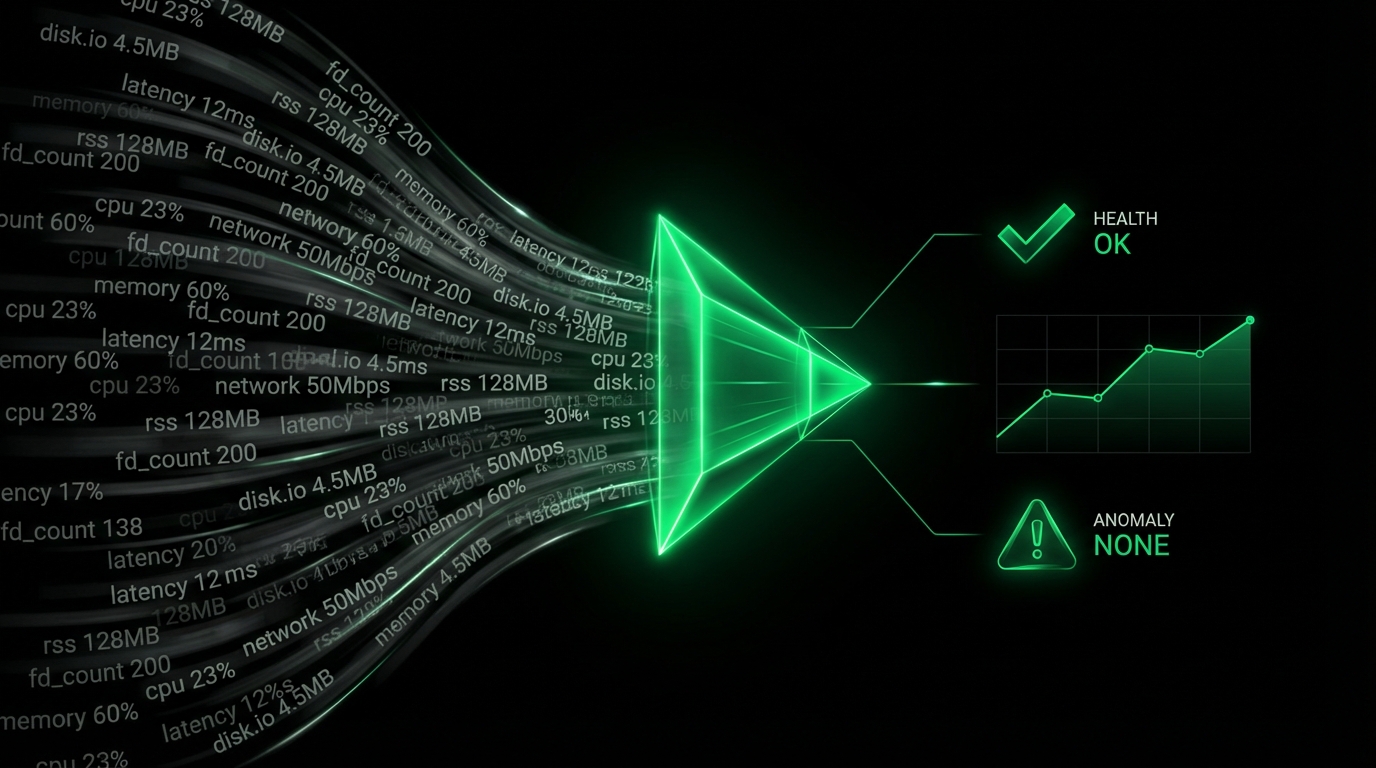

Advanced monitoring techniques with Netdata include setting alert thresholds to identify issues proactively, building custom dashboards tailored to specific users’ needs, and using Netdata’s built-in machine learning to detect anomalies in MongoDB metrics.
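Netdata alerts are defined in its own health configuration, so the following is not Netdata syntax. It is a minimal Python illustration of the threshold logic behind a warning/critical alert, including hysteresis so that a value hovering near a threshold does not flap between states. The `evaluate` and `track` functions and the thresholds used in the test (10 s warning, 60 s critical, 5 s hysteresis for replication lag) are hypothetical.

```python
from enum import IntEnum
from typing import Iterable, List


class Status(IntEnum):
    CLEAR = 0
    WARNING = 1
    CRITICAL = 2


def evaluate(value: float, previous: Status, warn: float, crit: float,
             hysteresis: float = 0.0) -> Status:
    """Classify value against warn/crit thresholds.

    While an alert is already raised, its threshold is lowered by
    `hysteresis`, so the alert clears only once the value has dropped
    clearly below the level that triggered it.
    """
    crit_level = crit - hysteresis if previous >= Status.CRITICAL else crit
    warn_level = warn - hysteresis if previous >= Status.WARNING else warn
    if value >= crit_level:
        return Status.CRITICAL
    if value >= warn_level:
        return Status.WARNING
    return Status.CLEAR


def track(values: Iterable[float], warn: float, crit: float,
          hysteresis: float = 0.0) -> List[Status]:
    """Evaluate a series of readings, carrying the status forward."""
    status = Status.CLEAR
    history = []
    for value in values:
        status = evaluate(value, status, warn, crit, hysteresis)
        history.append(status)
    return history
```

With hysteresis set to 0, a lag oscillating between 9 s and 11 s would raise and clear the warning on every sample; with 5 s, the warning stays raised until the lag falls below 5 s.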

Diagnose Root Causes Of Performance Issues Using Key MongoDB Statistics & Metrics

Netdata supports real-time diagnostics of MongoDB, helping you pinpoint performance bottlenecks and anomalies. By correlating metrics such as operation latency, database size, and transaction rates, Netdata helps identify the root causes of performance issues.

FAQs

What Is MongoDB Monitoring?

MongoDB monitoring involves collecting operational metrics from MongoDB databases to keep performance optimal, reduce the risk of data loss, and handle issues proactively.

Why Is MongoDB Monitoring Important?

Monitoring is crucial for ensuring the health, performance, and availability of MongoDB, because it lets you detect issues early, optimize performance, and maintain database integrity.

What Does A MongoDB Monitor Do?

A MongoDB monitor collects and visualizes metrics related to database performance, operations, replication, cache usage, and more, providing real-time insights and alerts.

How Can I Monitor MongoDB In Real Time?

Using the MongoDB monitoring tool from Netdata, you can track MongoDB performance metrics in real time with intuitive dashboards and customizable alerts.

Check out the live demo or sign up for free today to take your MongoDB monitoring to the next level.