Riak KV Monitoring

What Is Riak KV?

Riak KV is a distributed NoSQL key-value database designed for high availability, scalability, and fault tolerance. It can store a wide variety of data types and volumes, which makes it a popular choice for applications that need robust data storage.

Monitoring Riak KV With Netdata

Monitoring Riak KV is crucial to ensure it performs well under varying load. Netdata gives you real-time visibility into your Riak KV instances, so you can track throughput, latency, and other critical metrics and get the detail you need to troubleshoot and optimize database performance.

Why Is Riak KV Monitoring Important?

Monitoring Riak KV helps protect data integrity, availability, and performance. Because Riak KV often stores critical data, keeping it running efficiently minimizes the risk of data loss or service disruption. Monitoring also helps you identify issues such as resource exhaustion or rising latency before they affect users, so you can intervene in time and keep your application responsive.

What Are The Benefits Of Using Riak KV Monitoring Tools?

Using a dedicated monitoring tool such as Netdata for Riak KV provides several benefits:

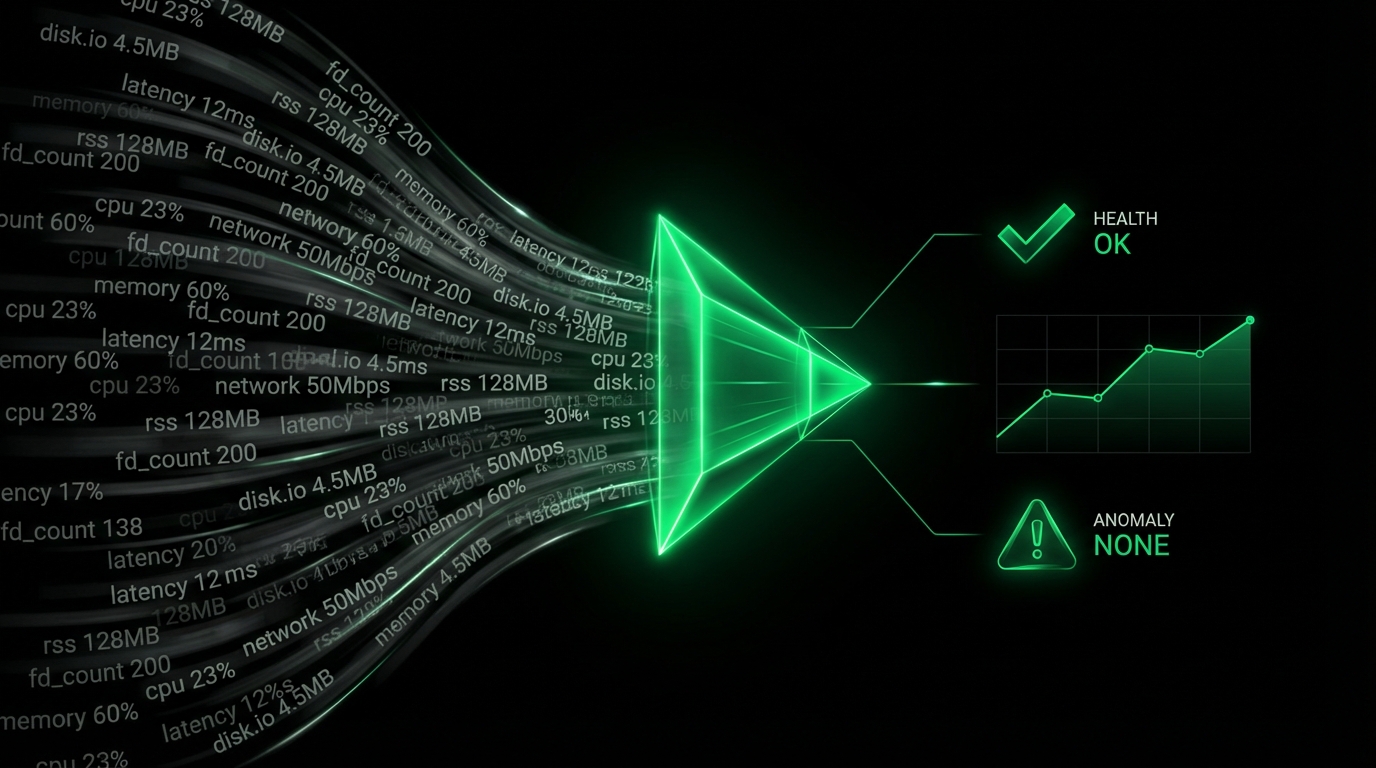

- Real-time insights: Monitor live stats to immediately spot anomalies.

- Historical data analysis: Track performance trends over time.

- Efficient troubleshooting: Quickly diagnose and fix issues with comprehensive metrics.

- Enhanced reliability: Ensure continuous availability and performance of Riak KV.

Understanding Riak KV Performance Metrics

Key Metrics to Monitor

- Throughput: Measures read and write operations.
  - riak.kv.throughput: Reads and writes coordinated by this node.

- Search Queries: Tracks search query activity.
  - riak.search: Search queries on the node.

- Latency Measurements: Critical for understanding response times.
  - riak.kv.latency.get: Time taken to serve GET requests.
  - riak.kv.latency.put: Time taken to serve PUT requests.

- VM and Memory Usage: Essential for resource management.
  - riak.vm: Total processes running in the Erlang VM.
  - riak.vm.memory.processes: Memory allocated and used by Erlang processes.

| Metric Name | Description |
|---|---|
| riak.kv.throughput | Reads and writes coordinated by the node. |
| riak.search | Search queries on the node. |
| riak.kv.latency.get | Latency of GET requests. |
| riak.kv.latency.put | Latency of PUT requests. |
| riak.vm | Total processes running in the Erlang VM. |
| riak.vm.memory.processes | Memory allocated and used by Erlang processes. |
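To show where values like these can come from, here is a minimal sketch that reads Riak's HTTP statistics endpoint and groups a few raw stats under the metric names above. It assumes the node serves `GET /stats` on the default HTTP port 8098 and that stat names such as `node_gets_total`, `node_get_fsm_time_95`, `sys_process_count`, and `memory_processes_used` match your Riak version. The helpers `fetch_stats`, `to_chart_values`, and `rate` are hypothetical names for illustration; this is not how Netdata's collector is implemented.

```python
import json
import urllib.request

# Assumption: the Riak node exposes its HTTP interface on the default port 8098.
STATS_URL = "http://127.0.0.1:8098/stats"


def fetch_stats(url: str = STATS_URL, timeout: float = 5.0) -> dict:
    """Download the /stats JSON document from a Riak KV node (hypothetical helper)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def _latency(stats: dict, op: str) -> dict:
    """Collect FSM timing stats (microseconds) for op, which is 'get' or 'put'."""
    prefix = f"node_{op}_fsm_time_"
    return {
        "mean": stats[prefix + "mean"],
        "median": stats[prefix + "median"],
        "p95": stats[prefix + "95"],
        "p99": stats[prefix + "99"],
        "max": stats[prefix + "100"],
    }


def to_chart_values(stats: dict) -> dict:
    """Group raw Riak stats under the metric names used in the table."""
    return {
        # Cumulative counters; convert them to per-second rates with rate().
        "riak.kv.throughput": {
            "gets": stats["node_gets_total"],
            "puts": stats["node_puts_total"],
        },
        "riak.kv.latency.get": _latency(stats, "get"),
        "riak.kv.latency.put": _latency(stats, "put"),
        "riak.vm": {"processes": stats["sys_process_count"]},
        "riak.vm.memory.processes": {
            "allocated": stats["memory_processes"],
            "used": stats["memory_processes_used"],
        },
    }


def rate(previous: float, current: float, interval_s: float) -> float:
    """Per-second rate between two samples of a cumulative counter."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    # A counter that goes backwards usually means the node restarted; report 0.
    return max(current - previous, 0) / interval_s
```

For example, sampling `node_gets_total` twice, five seconds apart, and passing both values to `rate` with `interval_s=5` gives the GETs per second coordinated by the node over that interval.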

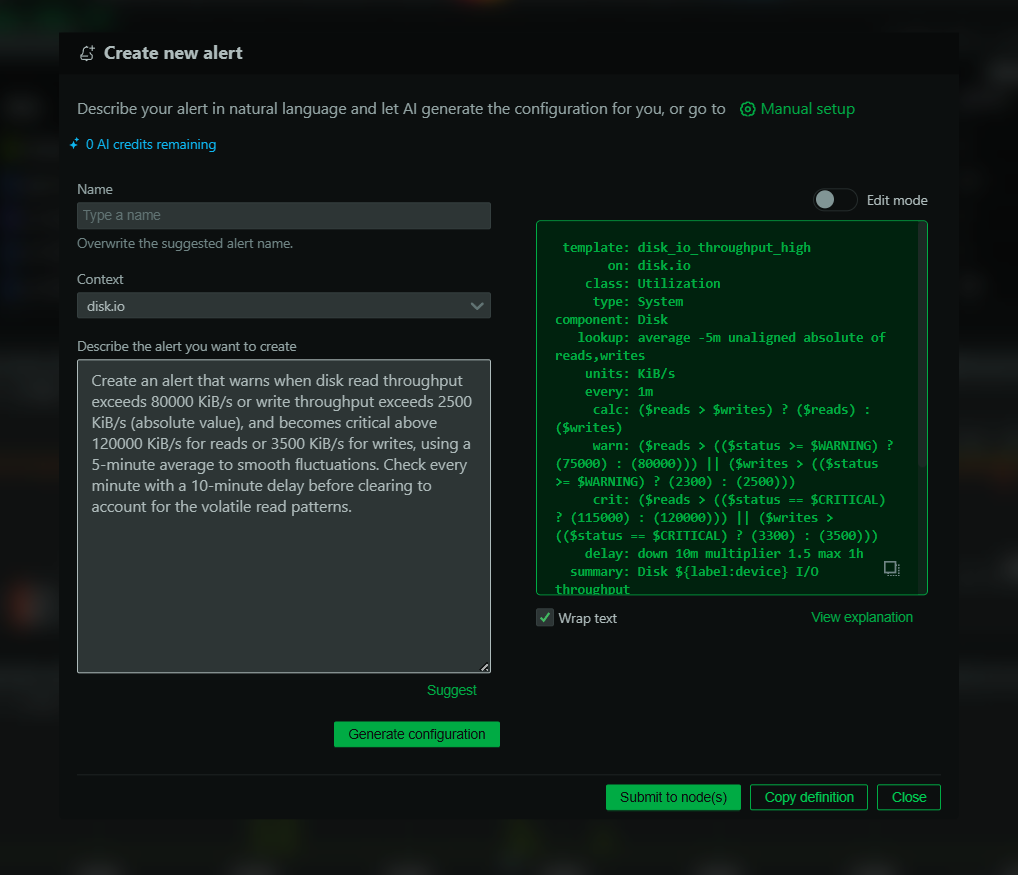

Advanced Riak KV Performance Monitoring Techniques

Advanced monitoring involves setting up alerts for specific conditions, applying anomaly detection, and analyzing latency and throughput metrics in depth to optimize Riak KV performance. By configuring Netdata appropriately, you can monitor multiple Riak KV instances and adjust data collection intervals to suit the level of detail your analysis needs.
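As an illustration of alerting on a specific condition, here is a minimal threshold alert with hysteresis on GET latency, using CLEAR, WARNING, and CRITICAL states. The class name `LatencyAlert` and the thresholds (50 ms warning, 200 ms critical, 0.8 release factor) are hypothetical values chosen for the example; in practice you would express alerts in Netdata's own health configuration rather than in code like this.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    CLEAR = "CLEAR"
    WARNING = "WARNING"
    CRITICAL = "CRITICAL"


@dataclass
class LatencyAlert:
    """Hypothetical threshold alert with hysteresis; values are in microseconds."""

    warn_us: float = 50_000     # assumed warning threshold (50 ms)
    crit_us: float = 200_000    # assumed critical threshold (200 ms)
    hysteresis: float = 0.8     # value must drop below threshold * 0.8 to de-escalate
    status: Status = Status.CLEAR

    def update(self, value_us: float) -> Status:
        if value_us >= self.crit_us:
            # Escalate to CRITICAL as soon as the critical threshold is crossed.
            self.status = Status.CRITICAL
        elif value_us >= self.warn_us:
            # Leave CRITICAL only once the value is clearly below the critical threshold.
            if self.status is not Status.CRITICAL or value_us < self.crit_us * self.hysteresis:
                self.status = Status.WARNING
        elif value_us < self.warn_us * self.hysteresis:
            # Clear only when the value is clearly below the warning threshold.
            self.status = Status.CLEAR
        elif self.status is Status.CRITICAL and value_us < self.crit_us * self.hysteresis:
            # Between the warning release point and the warning threshold: step down one level.
            self.status = Status.WARNING
        return self.status
```

Hysteresis keeps the alert from flapping when latency hovers around a threshold: it escalates immediately when a threshold is crossed, but de-escalates only after the value falls clearly below it.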

Diagnose Root Causes Of Performance Issues Using Key Riak KV Statistics & Metrics

Analyzing metrics such as latency, throughput, and resource usage can help you diagnose issues like slow queries, inefficient indexing, or unhealthy nodes. Use Netdata's customizable dashboards to dig into problem areas and address them quickly.
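One simple way to pinpoint when a latency problem began is to compare each new sample with a rolling baseline. The sketch below uses a rolling z-score; it is a deliberately basic stand-in and not the anomaly detection Netdata itself performs. The class name `RollingZScore` and its window, threshold, and minimum-sample defaults are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScore:
    """Hypothetical detector that flags samples far from a rolling baseline."""

    def __init__(self, window: int = 60, threshold: float = 3.0, min_samples: int = 10):
        self.values = deque(maxlen=window)  # most recent samples form the baseline
        self.threshold = threshold          # z-score above which a sample is flagged
        self.min_samples = min_samples      # do not judge until the baseline has data

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the previous samples."""
        anomalous = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self.threshold
            else:
                # A perfectly flat baseline: any change at all counts as a deviation.
                anomalous = value != mu
        self.values.append(value)
        return anomalous
```

For example, you could feed it successive p99 GET latency values (assumed here to come from Riak's `node_get_fsm_time_99` stat) and, when it flags a sample, compare the throughput and memory metrics around the same time to tell a load spike from a node-side problem.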

To experience the powerful monitoring capabilities of Netdata for Riak KV, check out our live demo or sign up for a free trial today.

FAQs

What Is Riak KV Monitoring?

Riak KV monitoring involves tracking the performance, resource usage, and health of Riak KV databases to ensure optimal functionality and reliability.

Why Is Riak KV Monitoring Important?

Monitoring Riak KV is essential for maintaining data integrity, ensuring service availability, and optimizing performance.

What Does A Riak KV Monitor Do?

A Riak KV monitor offers real-time visibility into database metrics like throughput, latency, and resource utilization, aiding in effective management and troubleshooting.

How Can I Monitor Riak KV In Real Time?

With Netdata, you can monitor Riak KV in real time and get instant insight into your database's performance and health.